Over the past three days, Dr. Sebastian Lapuschkin led a seminar on Explainable Artificial Intelligence (XAI). Across three sessions he covered why the field matters, how the individual decisions of AI models can be explained, and how explanations themselves can be evaluated.

Day 1: Establishing the Imperative of XAI

Dr. Lapuschkin’s opening lecture set the stage by explaining why XAI is needed in today's AI landscape. A comprehensive overview introduced attendees to the different dimensions of explainability and interpretability and to the field’s terminology, laying the groundwork for the sessions that followed.

Day 2: Unveiling Local Explainability

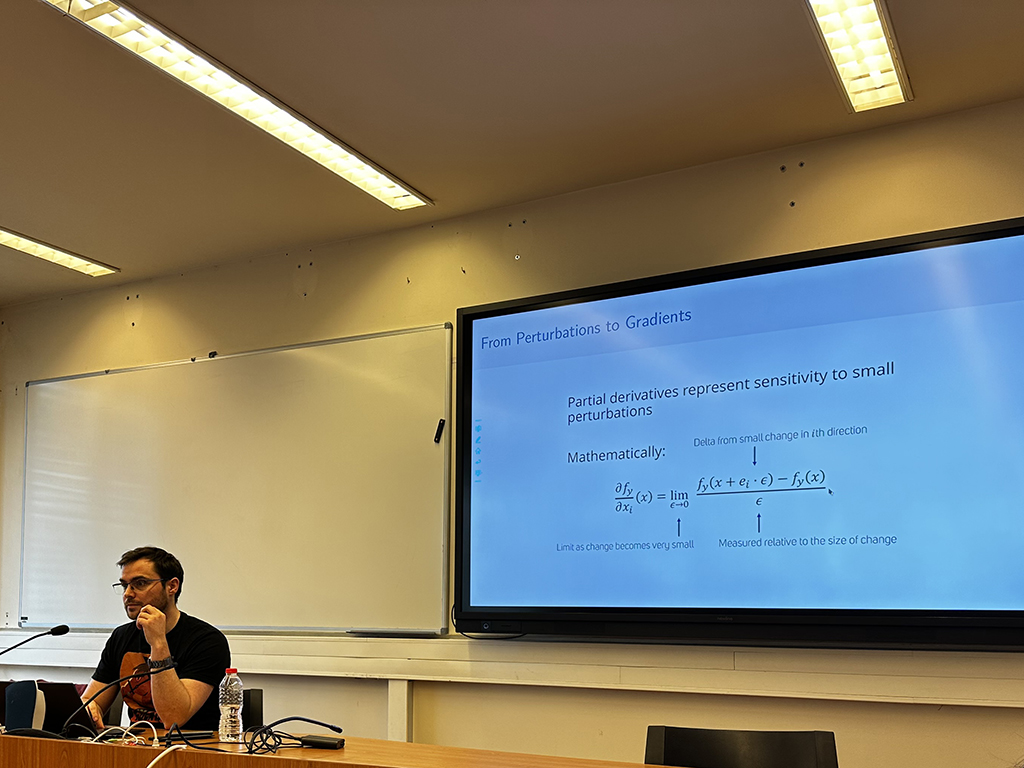

The second session delved into the technicalities of local explainability, which focuses on unraveling the decision-making process of AI models at a granular level. Dr. Lapuschkin presented several influential XAI approaches, and attendees then explored practical implementations of these methods together in interactive Python notebooks. The hands-on format showed how AI models arrive at individual decisions.

Day 3: Navigating XAI Evaluation

The last session explored XAI evaluation. Dr. Lapuschkin addressed questions such as how to evaluate explanations quantitatively and how to use them to measure model behavior beyond mere performance metrics. He also gave an overview of the current state-of-the-art approaches to AI explainability.

Dr. Lapuschkin’s seminar has not only demystified the complexities of XAI but has also sparked discussions on its practical applications and future advancements.